
Google Cloud Next 2025: All Eyes on AIs

There were over 10 keynotes and spotlight sessions at Google Cloud Next 2025, along with 700 deep-dive presentations. The event was packed, covered every angle of innovation, and offered a clear snapshot of Google Cloud’s newest additions and strategic focus.

Now that we’ve had a chance to absorb everything and some time to reflect, let’s take a closer look at each key area and highlight the most impactful announcements from Google Cloud Next ’25.

Artificial Intelligence and Multi-Agent Systems

With the public preview of Gemini 2.5 Pro—currently topping the Chatbot Arena rankings—and the upcoming Gemini 2.5 Flash, which is built for speed and efficiency, more people now have access to powerful tools for reasoning and coding.

On top of that, Google Cloud has made Vertex AI the only hyperscaler platform offering generative media models across video, images, audio, and music, thanks to Veo 2, Imagen 3, Chirp 3, and Lyria. Model choice is widening as well: Meta’s Llama 4 is now generally available, and Google Cloud announced a new partnership with Ai2.

Why It Matters: This positions Vertex AI as a top-tier platform for building multimodal AI applications. Developers and businesses get an unmatched mix of options and tools to work with a wide range of data types—something that clearly sets Google Cloud apart in the artificial intelligence space.

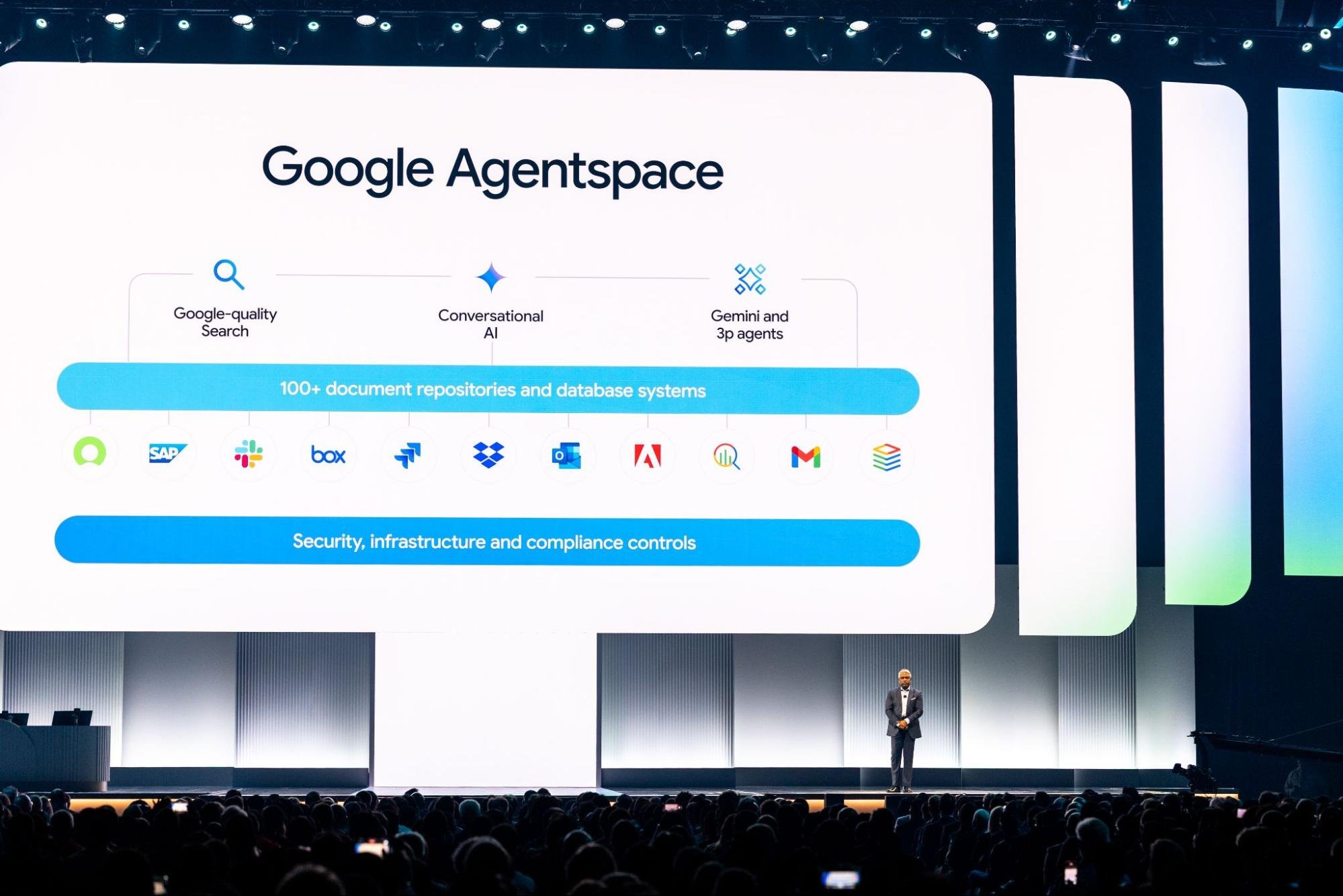

Google Cloud also introduced the Agent Development Kit (ADK) and the open Agent2Agent (A2A) protocol, which already has support from dozens of partners. These tools lay the groundwork for building and managing complex systems of interacting AI agents.

The new Agent Engine adds a fully managed environment for testing and deploying these systems at scale. Tools like Agentspace—now connected to Chrome Enterprise—along with Agent Gallery and a no-code Designer, are built to help companies roll out AI agents across teams and departments, putting the power of artificial intelligence into the hands of everyday users.

Why It Matters: This marks a clear shift toward helping businesses not just explore AI, but put it to work. Open standards bring the community in, while managed services and user-friendly tools lower the barrier to entry. That means more organizations can use AI for high-impact work like research, strategy, and innovation—without needing a team of engineers to make it happen.

Source: cloud.google.com

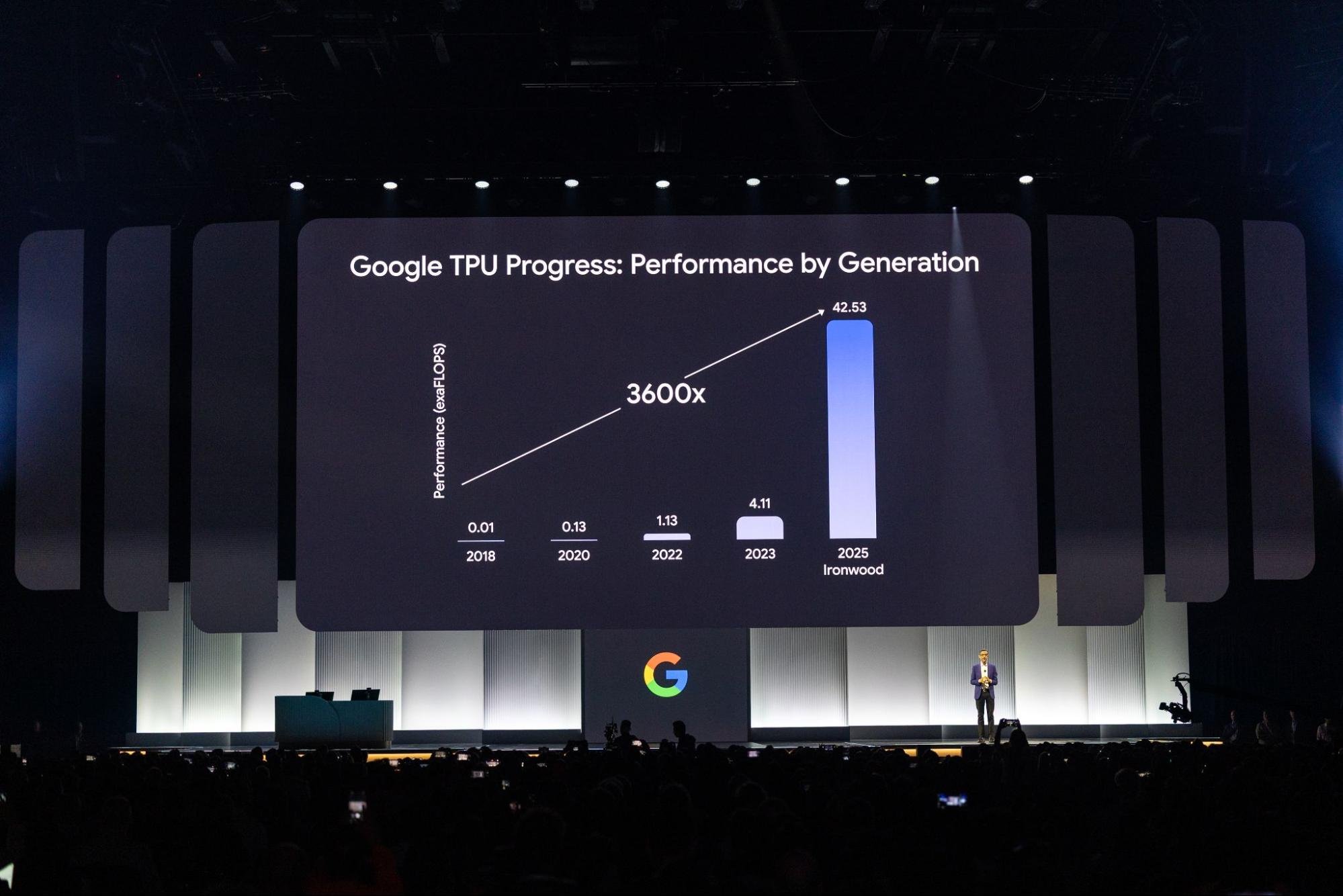

Artificial Intelligence Infrastructure

The upcoming Ironwood (Google’s 7th-generation TPU) and the launch of A4 and A4X virtual machines—powered by NVIDIA’s Blackwell GPUs (B200 and GB200)—showcase Google Cloud’s push to stay at the front of AI hardware innovation.

Google Cloud was the first major provider to offer these Blackwell GPUs and is already lined up to be among the first to offer NVIDIA’s Vera Rubin chips as well. For the first time, customers also have access to Pathways on Cloud, DeepMind’s distributed runtime built to handle large, complex AI models.

Why It Matters: These updates give users massive computing power and efficiency for training and running large-scale models. That’s essential as the demand for serious AI horsepower grows. And by getting to market early with top-tier NVIDIA GPUs, Google Cloud adds a key advantage for customers who need high-performance systems now—not later.

Google Distributed Cloud (GDC) is teaming up with NVIDIA to bring Gemini models to Blackwell-powered systems, including those in on-premises environments. Cluster Director (formerly known as Hypercompute Cluster) simplifies how organizations deploy and manage groups of accelerators, treating them as a single, unified system.

Coming soon: Cluster Director for Slurm and 360-degree observability features. Add to that support for networking up to 30,000 GPUs in a non-blocking setup and secure RDMA with Zero Trust, and you’ve got a system built for scale.

Why It Matters: Running large AI workloads isn’t just about raw power. These tools help enterprises deploy artificial intelligence wherever they need it—on-prem, hybrid, or otherwise—while making sure those systems are secure, efficient, and easier to manage at scale.

Source: cloud.google.com

Application Development

Gemini Code Assist agents are now available to handle everyday developer tasks like code migration, writing new features, reviews, testing, and documentation. They’re built right into Android Studio and even into a new Kanban-style board for easier project tracking.

In Firebase Studio, a new App Prototyping agent can take app ideas and turn them into working prototypes in no time.

On the infrastructure side, Gemini Cloud Assist speeds up the design and deployment process. It also helps troubleshoot issues and is tightly integrated across Google Cloud services, including the latest FinOps Hub 2.0.

Why It Matters: These AI assistants cut down on development time, take the pressure off operations, and give teams more bandwidth for higher-level work. By integrating artificial intelligence directly into the tools developers already use, Google Cloud is helping make app development faster, smoother, and more cost-efficient.

The new Application Design Center offers a visual, hands-on way to create and update application templates. Cloud Hub acts as the central dashboard for everything from deployments and system health to performance tuning and resource use.

App Hub ties together over 20 Google Cloud products to give teams a clear, app-first view of their environments. And thanks to a brand-new Cost Explorer (currently in private preview), you can now dig deep into app-level spending and usage metrics.

Why It Matters: For large organizations working across complex systems, these tools bring much-needed clarity. With better visibility into how apps are built, run, and costed, teams can make smarter governance decisions.

Firebase is stepping up as a go-to platform for developers building AI-powered apps, with a fresh set of tools aimed at speeding up development and improving quality. The new Firebase Studio is a cloud-based, AI-first workspace built around the Gemini platform.

It’s designed to help developers quickly spin up and ship full-stack, production-ready AI apps. Gemini Code Assist agents are baked right in; plus, Firebase now supports the Live API for Gemini models via Vertex AI, enabling real-time conversational capabilities right inside your apps.

Why It Matters: Firebase Studio brings together everything needed to build modern, AI-driven apps—fast. With Gemini integrated at every step and support for live, dynamic interactions, it’s easier than ever to create engaging user experiences.

On the quality front, a new App Testing agent in Firebase App Distribution (currently in preview) helps prepare mobile apps for launch by generating and running full end-to-end tests automatically.

Meanwhile, Firebase App Hosting is now generally available. It offers a Git-based workflow tailored for building and deploying full-stack web apps.

Why It Matters: These additions help developers catch issues earlier, improve app stability, and ship updates faster. Hosting is tightly integrated and opinionated in the right ways—perfect for teams that want to focus on building without wrangling infrastructure.

Cloud Run & SaaS Runtime

Cloud Run GPUs are now generally available, letting you run GPU-intensive workloads in a serverless setup. Plus, the public preview of multi-region deployments for Cloud Run boosts app resilience, and a private preview of worker pools for Kafka consumers adds support for pull-based workloads.

Meanwhile, SaaS Runtime offers a complete lifecycle management solution that helps SaaS providers model, deploy, and operate their services with minimal operational effort, along with built-in tools to quickly add artificial intelligence capabilities.

Why It Matters: These updates expand what Cloud Run can handle, making it a solid choice for demanding, mission-critical apps—like AI and ML inference—while improving reliability and event-driven architecture support. In turn, SaaS Runtime cuts down development and operational headaches.

Compute

The new C4D VMs, powered by AMD EPYC processors and Google’s custom Titanium architecture, deliver up to 30% better performance than previous generations.

C4 VMs, built on Intel’s Granite Rapids chips, push even further with the highest clock speeds of any Compute Engine VM—up to 4.2 GHz—and faster Local SSD performance.

For heavy-duty HPC use, the H4D VMs offer top-tier whole-node and per-core speed, along with industry-leading memory bandwidth.

Why It Matters: These VMs give customers more flexibility. Whether you’re running intense simulations, optimizing I/O-heavy processes, or just need reliable general compute, these new families offer the performance you need—without overspending.

Google Cloud is also introducing new VMs tailored for specific enterprise needs. The M4 series, certified for mission-critical SAP HANA deployments, offers up to 65% better price-performance.

The Z3 family, focused on storage-heavy workloads, now includes larger shapes and Titanium SSDs, plus bare-metal options that scale up to 72 TB.

On top of that, the Titanium ML Adapter integrates NVIDIA ConnectX-7 NICs to deliver 3.2 Tbps of GPU-to-GPU bandwidth. Titanium offload processors connect those GPU clusters to Jupiter, Google’s high-speed data center fabric.
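The 3.2 Tbps figure is easier to reason about when broken down per NIC. The minimal Python sketch below assumes eight ConnectX-7 NICs per VM at 400 Gbps each; that per-VM layout is an assumption for illustration and is not stated above, and the function names are hypothetical.

```python
def aggregate_gbps(nic_count: int, gbps_per_nic: int) -> int:
    """Sum the line rate of identical NICs, in gigabits per second."""
    return nic_count * gbps_per_nic


def gbps_to_gigabytes_per_second(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


if __name__ == "__main__":
    # Assumed layout: 8 NICs at 400 Gbps each (not confirmed by the article).
    total_gbps = aggregate_gbps(nic_count=8, gbps_per_nic=400)
    print(f"Aggregate bandwidth: {total_gbps / 1000} Tbps")
    print(f"Equivalent rate: {gbps_to_gigabytes_per_second(total_gbps)} GB/s")
```

These are line-rate numbers; real workloads will see less once protocol overhead and contention are taken into account.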

Why It Matters: These specialized VMs are built for jobs that can’t afford slowdowns. From enterprise databases to AI model training at scale, they’re optimized for speed, reliability, and performance. The upgraded Titanium family, in particular, helps support the massive data movement and scalability that modern artificial intelligence demands.

Containers & Kubernetes

- GKE Inference Gateway adds smart scaling and load balancing tailored for generative artificial intelligence models.

- GKE Inference Quickstart automates the nuts and bolts of setting up your AI infrastructure.

- RayTurbo on GKE, built with Anyscale, speeds up data processing by 4.5x and cuts node requirements in half.

Why It Matters: These tools take the heavy lifting out of deploying and managing inference workloads, letting you serve AI models at scale while saving both time and money. RayTurbo’s big efficiency gains are especially valuable for data-hungry pipelines.

- Cluster Director for GKE (now GA) lets you treat large fleets of GPU-powered VMs as a single, easy-to-manage unit.

- GKE Autopilot just got quicker pod scheduling and scaling. Its container-optimized compute platform is also coming soon to standard GKE clusters.

Why It Matters: These updates boost performance, improve operations, and trim costs—crucial for big AI jobs and other demanding workloads. Faster, smarter Autopilot makes managed Kubernetes an even more compelling option for teams of every size.

Customers

Google Cloud spotlighted hundreds of new customer stories from around the world, showing how organizations of all kinds are putting its AI tools to work.

- Bending Spoons processes a staggering 60 million photos a day with Imagen 3.

- DBS cut call handling times by 20% using the Customer Engagement Suite.

- Nevada DETR uses BigQuery and Vertex AI to power an Appeals Assistant that helps Referees approve benefits four times faster.

- Mercado Libre launched Vertex AI Search in three pilot countries, helping 100 million users find products faster and generating millions in additional revenue.

- Spotify uses BigQuery to personalize experiences for more than 675 million users worldwide.

- Verizon improves customer service for over 115 million connections with Google Cloud’s AI tools, including the Personal Research Assistant.

- Wayfair updates product attributes five times faster with Vertex AI.

Why It Matters: These stories aren’t just impressive—they’re proof of how widely Google Cloud’s artificial intelligence is being adopted. From speeding up operations and boosting creativity to improving customer service and driving revenue, businesses are seeing real results. It’s clear that AI isn’t just a buzzword anymore—it’s a tool that’s changing how industries work.

Databases

AlloyDB AI now supports natural language querying of structured data, letting developers use plain English alongside SQL. It also features improved vector search with cross-attention reranking, multimodal embeddings, and a new Gemini Embedding model for richer text understanding.

On top of that, the MCP Toolbox for Databases makes it easier to connect AI agents directly to enterprise data systems.

Why It Matters: These upgrades help break down the barrier between users and their data. Natural language support opens up database access to more people, and better vector search boosts performance for AI tasks like semantic matching. The ability to link AI agents to databases is a key enabler for building smarter enterprise tools.

Firestore now offers MongoDB compatibility with multi-region replication, strong consistency, and a 99.999% SLA—giving developers a familiar interface with enterprise-grade reliability.
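To put the 99.999% figure in perspective, the short Python sketch below converts an availability target into the downtime it permits. The function name and the 30-day and 365-day windows are assumptions chosen for illustration; the actual SLA terms define their own measurement period and exclusions.

```python
def allowed_downtime_minutes(availability_percent: float, period_days: float) -> float:
    """Return the maximum downtime, in minutes, that an availability target permits."""
    period_minutes = period_days * 24 * 60
    unavailable_fraction = 1 - availability_percent / 100
    return period_minutes * unavailable_fraction


if __name__ == "__main__":
    # Illustrative windows only; the real SLA defines its own period.
    for label, days in (("30-day month", 30), ("365-day year", 365)):
        minutes = allowed_downtime_minutes(99.999, days)
        print(f"99.999% over a {label}: about {minutes:.2f} minutes of downtime")
```

Under these assumptions, "five nines" leaves roughly five minutes of downtime per year, which is why the figure is usually reserved for mission-critical systems.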

Google Cloud has also expanded its Oracle offerings with the new Oracle Base Database Service and the general availability of Oracle Exadata X11M, providing more flexibility and control for Oracle workloads.

Meanwhile, Database Migration Service (DMS) now supports migrations from SQL Server to PostgreSQL, and the new Database Center, now generally available, offers a centralized, AI-powered control plane for managing database fleets across the board.

Why It Matters: These updates are a big win for enterprise customers. Whether migrating from legacy systems or optimizing mission-critical workloads, teams now have more options, better performance, and clearer paths forward. MongoDB compatibility makes Firestore a compelling choice for a huge base of developers, and expanded Oracle support fills a major need for large organizations.

Source: cloud.google.com

Data Analytics

BigQuery continues to evolve into a truly autonomous platform that blends analytics and AI from start to finish. At the heart of this evolution is the BigQuery Knowledge Engine, which uses Gemini to understand schema relationships, model data, and even suggest glossary terms.

Meanwhile, the new BigQuery AI query engine can handle both structured and unstructured data, drawing on Gemini’s real-world knowledge and reasoning.

A host of new features—like built-in pipelines, data prep, anomaly detection, semantic search, and contribution analysis—are now either generally available or in preview.

Why It Matters: These upgrades put artificial intelligence directly into the data workflow, allowing users to move from raw data to insights faster than ever. Whether you’re spotting anomalies, preparing data, or asking complex questions, BigQuery now helps you do it more intuitively—and with less manual work.

BigQuery also now supports multimodal tables, treating unstructured data as a first-class citizen.

The Universal Catalog (formerly Dataplex Catalog) offers a unified metadata layer, with metastore interoperability across engines and a business glossary for stronger governance.

New continuous queries allow near-instant analysis on streaming data, and managed disaster recovery—now generally available—brings automatic failover and near real-time replication to keep data protected and available.

Why It Matters: These updates reinforce BigQuery’s readiness for complex enterprise environments. Whether it’s real-time analytics, open ecosystem support, strict governance, or disaster resilience, BigQuery is now even more equipped to handle large-scale, mission-critical workloads.

Looker is getting a major upgrade too, with new features that bring business intelligence closer to everyday users. Conversational analytics (currently in preview) lets business users explore data simply by asking questions in natural language.

Gemini in Looker now powers features like Visualization Assistant, Formula Assistant, and Conversational Analytics, available to all Looker users. There’s even a Code Interpreter that handles tasks like forecasting and anomaly detection—no need for Python expertise.

Why It Matters: This lowers the bar for data analysis, making powerful insights available to people across the org—not just data teams. It’s a big step toward broader data literacy and faster, more confident decision-making.

Google Workspace: AI-Powered Productivity

- Google Sheets now includes Help me Analyze, which proactively surfaces insights from your data without waiting for you to ask.

- In Docs, Audio Overview turns documents into clear, natural-sounding audio or podcast-style summaries.

- Meanwhile, Google Workspace Flows automates everyday tasks like managing approvals, digging up customer info, and summarizing emails.

Why It Matters: These AI-powered tools are built right into the apps you use every day, helping you work smarter, surface insights, and cut down on repetitive work. The result? Big boosts in productivity and a fresh way for teams to interact with their data and documents.

Networking

Google Cloud is scaling its infrastructure to meet the extraordinary demands of today’s largest AI models—and the services built on them.

New networking support for up to 30,000 GPUs per cluster in a fully non-blocking setup, combined with Zero-Trust RDMA security and ultra-fast 3.2 Tbps GPU-to-GPU RDMA networking, removes a major performance barrier.

The introduction of 400G Cloud Interconnect and Cross-Cloud Interconnect further boosts bandwidth, offering 4x the throughput of previous generations.
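A rough way to see what 4x throughput means in practice is to estimate how long a bulk transfer takes at each link speed. The Python sketch below is an idealized calculation: it assumes the previous generation is a 100 Gbps link (implied by the 4x figure, not stated directly), a hypothetical 100 TB dataset, and a fully saturated link with no protocol overhead.

```python
def transfer_seconds(size_terabytes: float, link_gbps: float) -> float:
    """Ideal time, in seconds, to move a dataset over a link at full line rate.

    size_terabytes uses decimal terabytes (1 TB = 10**12 bytes).
    """
    size_bits = size_terabytes * 1e12 * 8
    return size_bits / (link_gbps * 1e9)


if __name__ == "__main__":
    dataset_tb = 100  # hypothetical dataset size
    for gbps in (100, 400):  # 100 Gbps is the assumed previous generation
        minutes = transfer_seconds(dataset_tb, gbps) / 60
        print(f"{dataset_tb} TB over {gbps} Gbps: about {minutes:.1f} minutes")
```

Real transfers will be slower than this lower bound, but the ratio between the two link speeds holds.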

Why It Matters: These upgrades are critical for training and running enormous AI models. With this kind of networking muscle, developers and enterprises can build and deploy AI systems that simply weren’t feasible before—without worrying that the infrastructure will hold them back.

Google Cloud is also strengthening its backbone for global connectivity and security. Cloud WAN now delivers a fully managed, high-performance network that reduces the total cost of ownership by up to 40%, while improving speed and reliability for distributed enterprise environments. On the security side, updates include:

- DNS Armor, which detects data exfiltration attempts.

- Hierarchical policies in Cloud Armor for more precise security controls.

- Cloud NGFW with Layer 7 domain filtering.

- Inline network DLP, offering real-time protection for sensitive data in transit.

Why It Matters: Whether you’re managing a hybrid setup or scaling across multiple clouds, these capabilities help enterprises stay fast, connected, and secure. The performance gains and stronger security posture are essential for protecting modern applications from increasingly complex threats.

Source: cloud.google.com

Security

Google Unified Security pulls everything into one place—visibility, threat detection, AI-powered security ops, continuous virtual red teaming, the enterprise browser, and Mandiant’s know-how.

Two new AI agents are stepping up the game: the Alert Triage Agent digs into alerts, investigates on the fly, gathers all the context, and makes a call on what’s going on.

The Malware Analysis Agent examines suspicious code and can even run scripts to unpack tricky, obfuscated threats.

Why It Matters: This all-in-one setup, powered by smart automation, makes security teams’ jobs easier, speeds up how quickly threats get spotted, and improves how accurately they get handled—while cutting down on manual work.

The Risk Protection Program (think discounted cyber insurance) now includes new partners like Beazley and Chubb, giving businesses more options.

The Mandiant Retainer offers on-demand access to top experts when investigations or threat intel are needed. And Mandiant Consulting’s partnerships with Rubrik and Cohesity help businesses bounce back faster with less downtime and lower recovery costs after an attack.

Why It Matters: Together, these make cyber risks less daunting—giving companies solid financial protection and expert backup when they need it most.

Storage

Hyperdisk Storage Pools have grown fivefold to 5 PiB, while the brand-new Hyperdisk Exapools deliver the largest and fastest block storage available in any public cloud—think exabytes of capacity and terabytes per second in speed.
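Because the pool size is quoted in pebibytes (PiB) rather than petabytes (PB), a quick conversion helps when comparing it against capacity figures given in decimal units. The Python sketch below uses the standard definitions of both units; the function name is hypothetical.

```python
BYTES_PER_PIB = 2 ** 50   # binary pebibyte
BYTES_PER_PB = 10 ** 15   # decimal petabyte


def pib_to_pb(pib: float) -> float:
    """Convert pebibytes (binary) to petabytes (decimal)."""
    return pib * BYTES_PER_PIB / BYTES_PER_PB


if __name__ == "__main__":
    new_limit_pib = 5
    previous_limit_pib = new_limit_pib / 5  # "fivefold" growth implies 1 PiB before
    print(f"Previous limit: {previous_limit_pib} PiB = {pib_to_pb(previous_limit_pib):.2f} PB")
    print(f"New limit: {new_limit_pib} PiB = {pib_to_pb(new_limit_pib):.2f} PB")
```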

Then there’s Rapid Storage, a new zonal Cloud Storage bucket with lightning-fast random read/write latency under 1 ms, data access that’s 20 times quicker, and throughput hitting 6 TB/s. Anywhere Cache cuts latency by up to 70% by keeping data close to GPUs and TPUs, speeding up artificial intelligence processing.

Plus, Google Cloud Managed Lustre offers a fully managed, high-performance parallel file system that can handle petabyte-scale workloads with ultra-low latency and millions of IOPS (input/output operations per second).

Why It Matters: These upgrades smash through data bottlenecks that can slow down AI training and inference, delivering huge boosts in storage speed, size, and responsiveness. The result? Faster model development and quicker rollout of AI-powered solutions.

Google Cloud also launched Storage Intelligence, the first service to use large language models to analyze object metadata at scale and deliver environment-specific insights.

Why It Matters: This lets teams manage their storage smarter by offering clear visibility and control over huge data estates, helping make better decisions around data placement, slashing costs, and staying compliant.

Startups

Google Cloud is stepping up its support for early-stage startups, especially those focused on artificial intelligence. Through a major partnership with Lightspeed, AI portfolio companies can now get up to $150,000 in cloud credits—on top of what’s already available through the Google Cloud for Startups program.

On top of that, startups get an extra $10,000 in credits specifically for Partner Models via Vertex AI Model Garden, encouraging hands-on experimentation with AI models from Anthropic, Meta, and more. The new Startup Perks program also opens doors to preferred solutions from partners like Datadog, Elastic, and GitLab.

Why It Matters: These initiatives make it much easier for startups to build and grow on Google Cloud. By offering generous credits, partner tools, and a variety of AI models, Google Cloud is helping fuel innovation and solidifying its spot as a go-to platform for rising tech companies.

About Us

As a trusted Google Cloud Premier Partner, Cloudfresh has empowered over 200,000 professionals in more than 70 countries. Our Google Cloud consulting services connect you with a team of certified experts deeply versed in Google Cloud’s tools, technologies, and best practices—allowing you to focus on your core business while maximizing the value of your cloud investment.